GitHub Actions Integration with QA Sphere

Automatically upload test results from your GitHub Actions workflows to QA Sphere using the QAS CLI tool. This integration eliminates manual result entry and provides instant visibility into your automated test results.

What You'll Achieve

With this integration, every time your GitHub Actions workflow runs:

- Test results automatically upload to QA Sphere

- New test runs are created with workflow information

- Tests are matched to existing QA Sphere test cases

- Pass/fail status, execution time, and screenshots are recorded

- Test history and trends are tracked over time

Prerequisites

Before starting, ensure you have:

- A GitHub repository with automated tests (Playwright, Cypress, Jest, etc.)

- Tests configured to generate JUnit XML format results

- A QA Sphere account with Test Runner role or higher

- Test cases in QA Sphere with markers (e.g., BD-001, PRJ-123)

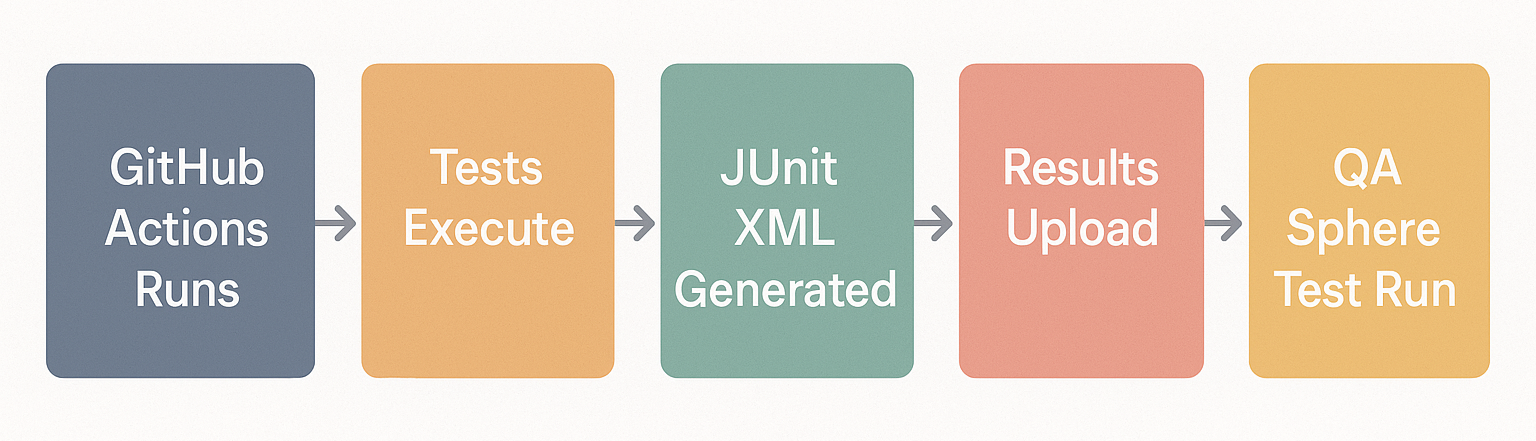

How It Works

- Your workflow runs automated tests

- Tests generate JUnit XML results file

- QAS CLI tool reads the XML file

- CLI matches tests to QA Sphere cases using markers

- Results are uploaded and appear in QA Sphere
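To make the matching step concrete, here is a minimal sketch of reading a JUnit XML file and pairing each test with a marker. It is an illustration only, not the QAS CLI's actual implementation: the function names, the marker pattern (uppercase project code, hyphen, digits), and the regex-based XML reading are all assumptions made for this example.

```javascript
// Illustrative only: a simplified stand-in for what a JUnit uploader does.
// The marker pattern below is an assumption based on the examples in this
// guide (BD-001, PRJ-123), not the CLI's real matching rule.
const MARKER_PATTERN = /\b([A-Z][A-Z0-9]*-\d+)\b/;

// Return the first marker found in a test name, or null if there is none.
function extractMarker(testName) {
  const match = MARKER_PATTERN.exec(testName);
  return match ? match[1] : null;
}

// Tiny JUnit reader: finds <testcase> elements and derives a status from
// <failure>, <error>, or <skipped> children. A real tool should use a
// proper XML parser instead of regular expressions.
function summarizeJUnit(xml) {
  const results = [];
  const testcasePattern = /<testcase\b([^>]*?)(\/>|>([\s\S]*?)<\/testcase>)/g;
  let m;
  while ((m = testcasePattern.exec(xml)) !== null) {
    const attrs = m[1];
    const body = m[3] || '';
    const nameMatch = /\bname="([^"]*)"/.exec(attrs);
    const name = nameMatch ? nameMatch[1] : '';
    let status = 'passed';
    if (/<(failure|error)\b/.test(body)) status = 'failed';
    else if (/<skipped\b/.test(body)) status = 'skipped';
    results.push({ marker: extractMarker(name), name, status });
  }
  return results;
}

const sampleXml = `
<testsuite name="login" tests="3">
  <testcase name="BD-001: User can login with valid credentials" time="1.2"/>
  <testcase name="BD-002: User sees error with invalid credentials" time="0.8">
    <failure message="expected error banner"/>
  </testcase>
  <testcase name="Unmarked helper test" time="0.1"/>
</testsuite>`;

for (const r of summarizeJUnit(sampleXml)) {
  console.log(`${r.marker ?? '(no marker)'} -> ${r.status}`);
}
```

Tests without a marker come back with `marker: null`, which corresponds to the cases that cannot be matched to a QA Sphere test case.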

Setup Steps

Step 1: Create QA Sphere API Key

- Log into your QA Sphere account

- Click the gear icon ⚙️ in the top right → Settings

- Navigate to API Keys

- Click Create API Key

- Copy and save the key - you won't see it again!

Your API key format: t123.ak456.abc789xyz

Step 2: Configure GitHub Secrets

Add these secrets to your GitHub repository:

- Go to your GitHub repository

- Navigate to Settings → Secrets and variables → Actions

- Click New repository secret and create:

| Name | Value |
|---|---|
| QAS_TOKEN | Your API key (e.g., t123.ak456.abc789xyz) |
| QAS_URL | Your QA Sphere URL (e.g., https://company.eu1.qasphere.com) |

- Click Add secret to save each one

Never commit API keys to your repository. Always use GitHub Secrets.

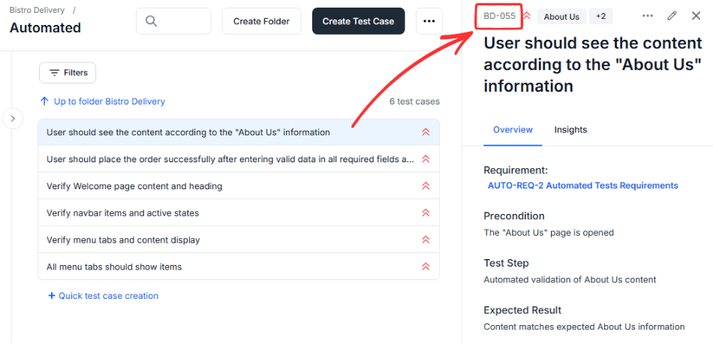

Step 3: Add Test Case Markers

Make sure each test name includes its QA Sphere marker in the format PROJECT-SEQUENCE (for example, BD-001). You can find the marker for each test case in the QA Sphere interface.

Playwright Example:

const { test, expect } = require('@playwright/test');

test('BD-001: User can login with valid credentials', async ({ page }) => {
  await page.goto('https://example.com/login');
  await page.fill('#username', '[email protected]');
  await page.fill('#password', 'password123');
  await page.click('#login-button');
  await expect(page).toHaveURL('/dashboard');
});

test('BD-002: User sees error with invalid credentials', async ({ page }) => {
  // test implementation
});

Cypress Example:

describe('Login Flow', () => {
  it('BD-001: should login successfully with valid credentials', () => {
    cy.visit('/login');
    cy.get('#username').type('[email protected]');
    cy.get('#password').type('password123');
    cy.get('#login-button').click();
    cy.url().should('include', '/dashboard');
  });
});

Jest Example:

describe('API Tests', () => {
  test('BD-015: GET /users returns user list', async () => {
    const response = await fetch('/api/users');
    expect(response.status).toBe(200);
    const data = await response.json();
    expect(data).toHaveLength(5);
  });
});

Step 4: Configure Test Framework

Configure your test framework to generate JUnit XML output:

Playwright Configuration

// playwright.config.js
const { defineConfig } = require('@playwright/test');

module.exports = defineConfig({
  testDir: './tests',
  timeout: 30000,

  // JUnit reporter for CI/CD
  reporter: [
    ['list'], // Console output
    ['junit', { outputFile: 'junit-results/results.xml' }] // For QA Sphere
  ],

  use: {
    headless: true,
    screenshot: 'only-on-failure',
    video: 'retain-on-failure',
  },

  projects: [
    { name: 'chromium', use: { browserName: 'chromium' } },
    { name: 'firefox', use: { browserName: 'firefox' } },
    { name: 'webkit', use: { browserName: 'webkit' } },
  ],
});

Cypress Configuration

// cypress.config.js
// Requires the reporter packages as dev dependencies:
//   npm install --save-dev cypress-multi-reporters mocha-junit-reporter
const { defineConfig } = require('cypress');

module.exports = defineConfig({
  e2e: {
    reporter: 'cypress-multi-reporters',
    reporterOptions: {
      configFile: 'reporter-config.json'
    }
  }
});

// reporter-config.json
{
  "reporterEnabled": "spec, mocha-junit-reporter",
  "mochaJunitReporterReporterOptions": {
    "mochaFile": "junit-results/results.xml"
  }
}

Jest Configuration

// jest.config.js
// Requires the reporter package as a dev dependency:
//   npm install --save-dev jest-junit
module.exports = {
  reporters: [
    'default',
    ['jest-junit', {
      outputDirectory: './junit-results',
      outputName: 'results.xml',
      classNameTemplate: '{classname}',
      titleTemplate: '{title}'
    }]
  ]
};

Step 5: Create GitHub Actions Workflow

Create a workflow file .github/workflows/qa-sphere-tests.yml in your repository:

For Playwright Projects

name: QA Sphere Integration

on:
  push:
    branches: [main, develop]
  pull_request:
    branches: [main, develop]
  workflow_dispatch: # Allows manual triggering

env:
  PLAYWRIGHT_VERSION: "1.51.1"

jobs:
  test:
    name: Run Playwright Tests
    runs-on: ubuntu-latest
    container:
      image: mcr.microsoft.com/playwright:v1.51.1-jammy

    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Install dependencies
        run: npm ci

      - name: Run Playwright tests
        run: npx playwright test
        continue-on-error: true

      - name: Upload test results
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: junit-results
          path: junit-results/
          retention-days: 7

      - name: Upload Playwright report
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: playwright-report
          path: playwright-report/
          retention-days: 7

  upload-to-qasphere:
    name: Upload Results to QA Sphere
    runs-on: ubuntu-latest
    needs: test
    if: always()

    steps:
      - name: Download test results
        uses: actions/download-artifact@v4
        with:
          name: junit-results
          path: junit-results/

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'

      - name: Install QAS CLI
        run: npm install -g qas-cli

      - name: Upload to QA Sphere
        env:
          QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
          QAS_URL: ${{ secrets.QAS_URL }}
        run: qasphere junit-upload ./junit-results/results.xml

      # Runs only when the upload step succeeded, so the message stays truthful
      - name: Summary
        run: echo "✅ Test results uploaded to QA Sphere"

For Cypress Projects

name: QA Sphere Integration

on:
  push:
    branches: [main, develop]
  pull_request:
    branches: [main, develop]
  workflow_dispatch:

jobs:
  test:
    name: Run Cypress Tests
    runs-on: ubuntu-latest
    container:
      image: cypress/browsers:node18.12.0-chrome107

    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Install dependencies
        run: npm ci

      - name: Run Cypress tests
        run: npx cypress run
        continue-on-error: true

      - name: Upload test results
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: junit-results
          path: junit-results/
          retention-days: 7

      - name: Upload videos
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: cypress-videos
          path: cypress/videos/
          retention-days: 7

      - name: Upload screenshots
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: cypress-screenshots
          path: cypress/screenshots/
          retention-days: 7

  upload-to-qasphere:
    name: Upload Results to QA Sphere
    runs-on: ubuntu-latest
    needs: test
    if: always()

    steps:
      - name: Download test results
        uses: actions/download-artifact@v4
        with:
          name: junit-results
          path: junit-results/

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'

      - name: Install QAS CLI
        run: npm install -g qas-cli

      - name: Upload to QA Sphere
        env:
          QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
          QAS_URL: ${{ secrets.QAS_URL }}
        run: qasphere junit-upload ./junit-results/results.xml

      - name: Summary
        run: echo "✅ Results uploaded to QA Sphere"

For Jest Projects

name: QA Sphere Integration

on:
  push:
    branches: [main, develop]
  pull_request:
    branches: [main, develop]
  workflow_dispatch:

jobs:
  test:
    name: Run Jest Tests
    runs-on: ubuntu-latest

    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'

      - name: Install dependencies
        run: npm ci

      - name: Run Jest tests
        run: npm test
        continue-on-error: true

      - name: Upload test results
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: junit-results
          path: junit-results/
          retention-days: 7

      - name: Upload coverage
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: coverage
          path: coverage/
          retention-days: 7

  upload-to-qasphere:
    name: Upload Results to QA Sphere
    runs-on: ubuntu-latest
    needs: test
    if: always()

    steps:
      - name: Download test results
        uses: actions/download-artifact@v4
        with:
          name: junit-results
          path: junit-results/

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'

      - name: Install QAS CLI
        run: npm install -g qas-cli

      - name: Upload to QA Sphere
        env:
          QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
          QAS_URL: ${{ secrets.QAS_URL }}
        run: qasphere junit-upload ./junit-results/results.xml

      - name: Summary
        run: echo "✅ Results uploaded to QA Sphere"

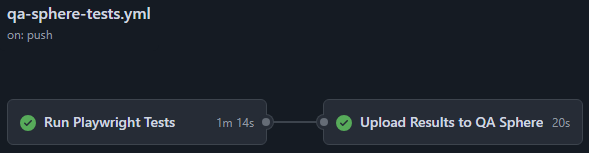

Step 6: Push and Verify

- Commit your changes:

git add .github/workflows/qa-sphere-tests.yml playwright.config.js # or your config files

git commit -m "Add GitHub Actions with QA Sphere integration"

git push origin main

- Monitor the workflow:
  - Go to GitHub → Actions tab
  - Watch your workflow execute
  - Check both the Run Playwright Tests and Upload Results to QA Sphere jobs

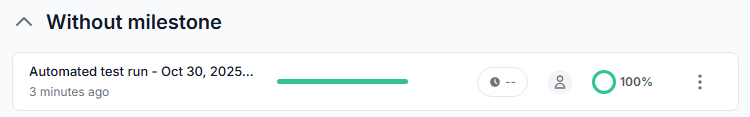

- Verify in QA Sphere:
  - Log into QA Sphere
  - Navigate to your project → Test Runs
  - See the new run with your test results

Advanced Usage

Available CLI Options

The QAS CLI junit-upload command creates a new test run within a QA Sphere project from your JUnit XML files or uploads results to an existing run.

qasphere junit-upload [options] <path-to-junit-xml>

Options:

- -r, --run-url <url> - Upload to an existing test run (otherwise creates a new run)
- --run-name <template> - Name template for creating new test runs (only used when --run-url is not specified)
- --attachments - Detect and upload attachments (screenshots, videos, logs)
- --force - Ignore API request errors, invalid test cases, or attachment issues
- -h, --help - Show command help

Run Name Template Placeholders

The --run-name option supports the following placeholders:

Environment Variables:

- {env:VARIABLE_NAME} - Any environment variable (e.g., {env:GITHUB_RUN_NUMBER}, {env:GITHUB_SHA})

Date Placeholders:

- {YYYY} - 4-digit year (e.g., 2025)
- {YY} - 2-digit year (e.g., 25)
- {MMM} - 3-letter month (e.g., Jan, Feb, Mar)
- {MM} - 2-digit month (e.g., 01, 02, 12)
- {DD} - 2-digit day (e.g., 01, 15, 31)

Time Placeholders:

- {HH} - 2-digit hour in 24-hour format (e.g., 00, 13, 23)
- {hh} - 2-digit hour in 12-hour format (e.g., 01, 12)
- {mm} - 2-digit minute (e.g., 00, 30, 59)
- {ss} - 2-digit second (e.g., 00, 30, 59)
- {AMPM} - AM/PM indicator

Default Template:

If --run-name is not specified, the default template is:

Automated test run - {MMM} {DD}, {YYYY}, {hh}:{mm}:{ss} {AMPM}

Example Output:

Automated test run - Jan 15, 2025, 02:30:45 PM

The --run-name option is only used when creating new test runs (i.e., when --run-url is not specified).
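To see how a template turns into a run name, the placeholder rules above can be sketched in a few lines of JavaScript. This is an illustrative re-implementation, not the CLI's code. The function name `renderRunName` is hypothetical, and three behaviors are assumptions: the use of local time, an unset environment variable rendering as an empty string, and unknown placeholders being left untouched.

```javascript
// Illustrative sketch of the documented placeholders; not the QAS CLI source.
const MONTHS = ['Jan', 'Feb', 'Mar', 'Apr', 'May', 'Jun',
                'Jul', 'Aug', 'Sep', 'Oct', 'Nov', 'Dec'];
const pad2 = (n) => String(n).padStart(2, '0');

function renderRunName(template, date = new Date(), env = process.env) {
  const hours24 = date.getHours();      // assumption: local time
  const hours12 = hours24 % 12 || 12;   // 0 and 12 both display as 12
  const values = {
    YYYY: String(date.getFullYear()),
    YY: pad2(date.getFullYear() % 100),
    MMM: MONTHS[date.getMonth()],
    MM: pad2(date.getMonth() + 1),
    DD: pad2(date.getDate()),
    HH: pad2(hours24),
    hh: pad2(hours12),
    mm: pad2(date.getMinutes()),
    ss: pad2(date.getSeconds()),
    AMPM: hours24 < 12 ? 'AM' : 'PM',
  };
  // One pass over the template: either {env:NAME} or a date/time token.
  return template.replace(
    /\{(env:([A-Za-z_][A-Za-z0-9_]*)|[A-Za-z]+)\}/g,
    (whole, key, envName) => {
      if (envName !== undefined) return env[envName] ?? ''; // assumption: unset -> ''
      return Object.prototype.hasOwnProperty.call(values, key) ? values[key] : whole;
    }
  );
}

const when = new Date(2025, 0, 15, 14, 30, 45); // Jan 15, 2025, 2:30:45 PM local time
console.log(renderRunName('Automated test run - {MMM} {DD}, {YYYY}, {hh}:{mm}:{ss} {AMPM}', when));
console.log(renderRunName('Run #{env:GITHUB_RUN_NUMBER} - {env:GITHUB_REF_NAME}', when,
  { GITHUB_RUN_NUMBER: '12345', GITHUB_REF_NAME: 'main' }));
```

A sketch like this can be handy for previewing a template locally before committing it to a workflow; the CLI's real output remains the source of truth.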

Usage Examples:

# Create new run with default name template

qasphere junit-upload ./junit-results/results.xml

# Upload to existing run (--run-name is ignored)

qasphere junit-upload -r https://company.eu1.qasphere.com/project/BD/run/42 ./junit-results/results.xml

# Simple static name

qasphere junit-upload --run-name "v1.4.4-rc5" ./junit-results/results.xml

# With environment variables

qasphere junit-upload --run-name "Run #{env:GITHUB_RUN_NUMBER} - {env:GITHUB_REF_NAME}" ./junit-results/results.xml

# Output: "Run #12345 - main"

# With date placeholders

qasphere junit-upload --run-name "Release {YYYY}-{MM}-{DD}" ./junit-results/results.xml

# Output: "Release 2025-01-15"

# With date and time placeholders

qasphere junit-upload --run-name "Nightly Tests {MMM} {DD}, {YYYY} at {HH}:{mm}" ./junit-results/results.xml

# Output: "Nightly Tests Jan 15, 2025 at 22:34"

# Complex template with multiple placeholders

qasphere junit-upload --run-name "Build {env:BUILD_NUMBER} - {YYYY}/{MM}/{DD} {hh}:{mm} {AMPM}" ./junit-results/results.xml

# Output: "Build v1.4.4-rc5 - 2025/01/15 10:34 PM"

# With attachments

qasphere junit-upload --attachments ./junit-results/results.xml

# Multiple files

qasphere junit-upload ./junit-results/*.xml

# Force upload on errors

qasphere junit-upload --force ./junit-results/results.xml

Upload to Existing Test Run

To update a specific test run instead of creating a new one:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
    QAS_URL: ${{ secrets.QAS_URL }}
  run: |
    RUN_ID=42
    qasphere junit-upload \
      -r ${QAS_URL}/project/BD/run/${RUN_ID} \
      ./junit-results/results.xml

Upload with Attachments

Include screenshots and logs with your results:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
    QAS_URL: ${{ secrets.QAS_URL }}
  run: qasphere junit-upload --attachments ./junit-results/results.xml

The CLI automatically detects and uploads:

- Screenshots from test failures

- Video recordings

- Log files

- Any files referenced in the JUnit XML

Upload Multiple XML Files

If you have multiple test suites generating separate XML files:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
    QAS_URL: ${{ secrets.QAS_URL }}
  run: qasphere junit-upload ./junit-results/*.xml

Branch-Specific Runs

Create different runs for different branches:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
    QAS_URL: ${{ secrets.QAS_URL }}
  run: |
    if [ "${{ github.ref_name }}" = "main" ]; then
      # Upload to production run
      qasphere junit-upload -r ${QAS_URL}/project/BD/run/100 ./junit-results/results.xml
    elif [ "${{ github.ref_name }}" = "develop" ]; then
      # Upload to development run
      qasphere junit-upload -r ${QAS_URL}/project/BD/run/101 ./junit-results/results.xml
    else
      # Create new run for feature branches
      qasphere junit-upload ./junit-results/results.xml
    fi

Add Workflow Metadata

Use the --run-name option to include GitHub workflow information in test run titles:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
    QAS_URL: ${{ secrets.QAS_URL }}
  run: |
    qasphere junit-upload \
      --run-name "Run #{env:GITHUB_RUN_NUMBER} - {env:GITHUB_REF_NAME}" \
      ./junit-results/results.xml
    # Output: "Run #12345 - main"

Common GitHub Variables:

- {env:GITHUB_RUN_NUMBER} - Workflow run number
- {env:GITHUB_REF_NAME} - Branch or tag name
- {env:GITHUB_SHA} - Commit SHA (full)
- {env:GITHUB_ACTOR} - User who triggered the workflow
- {env:GITHUB_JOB} - Current job name
- {env:GITHUB_WORKFLOW} - Workflow name

Examples:

# A block scalar (run: |) is used throughout because, in a single-line YAML value,
# a space followed by "#" starts a comment and would cut the command short.

# Workflow with date and time
- run: |
    qasphere junit-upload --run-name "Run #{env:GITHUB_RUN_NUMBER} - {YYYY}-{MM}-{DD} {HH}:{mm}" ./junit-results/results.xml

# Branch and commit info
- run: |
    qasphere junit-upload --run-name "{env:GITHUB_REF_NAME} - {env:GITHUB_SHA}" ./junit-results/results.xml

# Complete metadata
- run: |
    qasphere junit-upload --run-name "Build #{env:GITHUB_RUN_NUMBER} ({env:GITHUB_REF_NAME}) - {MMM} {DD}, {hh}:{mm} {AMPM}" ./junit-results/results.xml

Force Upload on Errors

Continue uploading even if some tests can't be matched:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
    QAS_URL: ${{ secrets.QAS_URL }}
  run: qasphere junit-upload --force ./junit-results/results.xml

Common Scenarios

Scenario 1: Nightly Test Runs

Run tests on a schedule and upload results with descriptive names:

name: Nightly Tests

on:
  schedule:
    - cron: '0 2 * * *' # Run at 2 AM UTC daily
  workflow_dispatch:

jobs:
  test:
    name: Run Nightly Tests
    runs-on: ubuntu-latest
    # ... test steps ...

  upload-to-qasphere:
    name: Upload Results
    runs-on: ubuntu-latest
    needs: test
    if: always()

    steps:
      - name: Download test results
        uses: actions/download-artifact@v4
        with:
          name: junit-results
          path: junit-results/

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'

      - name: Install QAS CLI
        run: npm install -g qas-cli

      - name: Upload to QA Sphere
        env:
          QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
          QAS_URL: ${{ secrets.QAS_URL }}
        run: |
          # Create run with date in the name
          qasphere junit-upload --run-name "Nightly Tests - {YYYY}-{MM}-{DD}" ./junit-results/results.xml
          # Output: "Nightly Tests - 2025-01-15"

          # Alternative with time included (use instead of the command above;
          # running both would create two test runs)
          # qasphere junit-upload --run-name "Nightly {MMM} {DD}, {YYYY} at {HH}:{mm}" ./junit-results/results.xml
          # Output: "Nightly Jan 15, 2025 at 22:30"

Scenario 2: Parallel Test Execution

Run tests in parallel using matrix strategy and upload all results:

name: Parallel Tests

on:
  push:
    branches: [main, develop]

jobs:
  test:
    name: Test - ${{ matrix.suite }}
    runs-on: ubuntu-latest
    strategy:
      matrix:
        suite: [unit, integration, e2e]

    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'

      - name: Install dependencies
        run: npm ci

      - name: Run ${{ matrix.suite }} tests
        run: npm run test:${{ matrix.suite }}
        continue-on-error: true

      - name: Upload test results
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: junit-results-${{ matrix.suite }}
          path: junit-results/
          retention-days: 7

  upload-to-qasphere:
    name: Upload Results to QA Sphere
    runs-on: ubuntu-latest
    needs: test
    if: always()

    steps:
      # Each artifact is extracted into its own subdirectory
      # (junit-results/junit-results-unit/, ...). merge-multiple is left off
      # because every suite writes a file named results.xml, and merging
      # would let them overwrite one another.
      - name: Download all test results
        uses: actions/download-artifact@v4
        with:
          pattern: junit-results-*
          path: junit-results/

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'

      - name: Install QAS CLI
        run: npm install -g qas-cli

      - name: Upload to QA Sphere
        env:
          QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
          QAS_URL: ${{ secrets.QAS_URL }}
        run: qasphere junit-upload ./junit-results/*/*.xml
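When several suites produce result files, the files can end up in nested directories (for example, one folder per suite), where a flat `*.xml` glob will not see them. The following Node.js sketch lists every XML file under a directory so you can check what would be uploaded. It is a debugging aid written for this guide, not part of the QAS CLI; the function name `findXmlFiles` and the `./junit-results` location are assumptions.

```javascript
const fs = require('node:fs');
const path = require('node:path');

// Recursively collect all *.xml files below `dir`, sorted for stable output.
function findXmlFiles(dir) {
  const found = [];
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const full = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      found.push(...findXmlFiles(full));
    } else if (entry.isFile() && entry.name.endsWith('.xml')) {
      found.push(full);
    }
  }
  return found.sort();
}

// Assumed location of downloaded results; adjust to your workflow.
const resultsRoot = './junit-results';
if (fs.existsSync(resultsRoot)) {
  console.log(findXmlFiles(resultsRoot).join('\n'));
} else {
  console.log(`No directory found at ${resultsRoot}`);
}
```

Running this in a step just before the upload makes it easy to spot missing or overwritten result files in the workflow log.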

Scenario 3: Multi-Environment Testing

Test against different environments:

name: Multi-Environment Tests

on:
  push:
    branches: [main, develop]

jobs:
  test:
    name: Test - ${{ matrix.environment }}
    runs-on: ubuntu-latest
    strategy:
      matrix:
        environment: [staging, production]
        include:
          - environment: staging
            base_url: https://staging.example.com
          - environment: production
            base_url: https://example.com

    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'

      - name: Install dependencies
        run: npm ci

      - name: Run tests
        env:
          BASE_URL: ${{ matrix.base_url }}
          TEST_ENV: ${{ matrix.environment }}
        run: npm test
        continue-on-error: true

      - name: Upload test results
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: junit-results-${{ matrix.environment }}
          path: junit-results/
          retention-days: 7

  upload-to-qasphere:
    name: Upload Results
    runs-on: ubuntu-latest
    needs: test
    if: always()

    steps:
      # Each artifact is extracted into its own subdirectory. merge-multiple is
      # left off because both environments write a file named results.xml, and
      # merging would let one overwrite the other.
      - name: Download all test results
        uses: actions/download-artifact@v4
        with:
          pattern: junit-results-*
          path: junit-results/

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'

      - name: Install QAS CLI
        run: npm install -g qas-cli

      - name: Upload to QA Sphere
        env:
          QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
          QAS_URL: ${{ secrets.QAS_URL }}
        run: qasphere junit-upload ./junit-results/*/*.xml

Scenario 4: Version/Release Tagging

Tag test runs with version numbers or release names:

name: Release Tests

on:
  push:
    tags:
      - 'v*'
  workflow_dispatch:

env:
  VERSION: ${{ github.ref_name }}

jobs:
  test:
    name: Run Release Tests
    runs-on: ubuntu-latest
    # ... test steps ...

  upload-to-qasphere:
    name: Upload Results
    runs-on: ubuntu-latest
    needs: test
    if: always()

    steps:
      - name: Download test results
        uses: actions/download-artifact@v4
        with:
          name: junit-results
          path: junit-results/

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'

      - name: Install QAS CLI
        run: npm install -g qas-cli

      - name: Upload to QA Sphere
        env:
          QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
          QAS_URL: ${{ secrets.QAS_URL }}
        run: |
          # Simple version tag
          qasphere junit-upload --run-name "Release {env:VERSION}" ./junit-results/results.xml
          # Output: "Release v1.4.5"

          # Alternative: version with date (use instead of the command above;
          # running both would create two test runs)
          # qasphere junit-upload --run-name "Release {env:VERSION} - {YYYY}-{MM}-{DD}" ./junit-results/results.xml
          # Output: "Release v1.4.5 - 2025-01-15"

For different handling of tags vs branches:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
    QAS_URL: ${{ secrets.QAS_URL }}
  run: |
    if [ "${{ github.ref_type }}" = "tag" ]; then
      # For git tags, use tag name
      qasphere junit-upload --run-name "Release {env:GITHUB_REF_NAME}" ./junit-results/results.xml
    else
      # For regular commits, use branch and commit
      SHORT_SHA=$(echo "${{ github.sha }}" | cut -c1-7)
      qasphere junit-upload --run-name "{env:GITHUB_REF_NAME} - ${SHORT_SHA}" ./junit-results/results.xml
    fi

Troubleshooting

Issue: Tests Not Appearing in QA Sphere

Symptoms:

- Upload succeeds but no results in QA Sphere

- "Test case not found" warnings in logs

Solutions:

- Ensure test cases exist in QA Sphere:
  - Check that BD-001, BD-002, etc. exist in your QA Sphere project
  - Verify the project code matches (BD, PRJ, etc.)
- Check marker format:
  - Must be PROJECT-NUMBER format
  - Examples: BD-001, PRJ-123, TEST-456

Issue: Authentication Failed (401 Error)

Symptoms:

Error: Authentication failed (401)

Solutions:

- Verify API key is correct:
  - Go to QA Sphere → Settings → API Keys
  - Check the key hasn't been deleted
  - Regenerate if needed
- Check GitHub Secrets:
  - Settings → Secrets and variables → Actions
  - Verify QAS_TOKEN is set correctly
  - Ensure no extra spaces or line breaks
- Verify key permissions:
  - API key must have Test Runner role or higher
  - Check user permissions in QA Sphere

Issue: JUnit XML File Not Found

Symptoms:

Error: File ./junit-results/results.xml does not exist

Solutions:

- Check artifact upload configuration:

- name: Upload test results
  uses: actions/upload-artifact@v4
  if: always()
  with:
    name: junit-results
    path: junit-results/ # Make sure this matches your output path

- Verify test framework configuration:
  - Playwright: Check playwright.config.js reporter
  - Cypress: Check reporter-config.json
  - Jest: Check jest.config.js reporters
- Add debug output:

- name: Debug - List files
  run: |
    ls -la junit-results/
    cat junit-results/results.xml

- name: Upload to QA Sphere
  run: qasphere junit-upload ./junit-results/results.xml

Issue: Artifact Not Found

Symptoms:

Error: Unable to find any artifacts for the associated workflow

Solutions:

- Ensure artifact names match:

# In test job
- name: Upload test results
  uses: actions/upload-artifact@v4
  with:
    name: junit-results # Must match

# In upload job
- name: Download test results
  uses: actions/download-artifact@v4
  with:
    name: junit-results # Must match

- Check job dependencies:

upload-to-qasphere:
  needs: test # Must reference the correct job name
  if: always() # Run even if test job fails

Issue: Playwright Version Mismatch

Symptoms:

Error: Executable doesn't exist at /ms-playwright/chromium...

Solution:

Match Docker image version to your Playwright package version:

# Check your Playwright version
npm list @playwright/test
# Output: @playwright/test@1.51.1

# Update workflow file
jobs:
  test:
    container:
      image: mcr.microsoft.com/playwright:v1.51.1-jammy # Match the version

Issue: Secrets Not Available

Symptoms:

Error: QAS_TOKEN environment variable is not set

Solutions:

- Verify secrets are defined:
  - Go to repository Settings → Secrets and variables → Actions
  - Ensure QAS_TOKEN and QAS_URL exist
- Check secret usage in workflow:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }} # Correct syntax
    QAS_URL: ${{ secrets.QAS_URL }}
  run: qasphere junit-upload ./junit-results/results.xml

- For organization secrets:
  - Ensure repository has access to organization secrets
  - Check secret visibility settings
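Missing or malformed variables are easier to diagnose before the CLI runs. The sketch below checks the two variables this guide uses and reports problems in plain language. It is a hypothetical helper, not a QAS CLI feature: the function name `checkQasEnv` is invented here, and the expectation that QAS_URL uses https is an assumption based on the example URL in this guide.

```javascript
// Hypothetical pre-flight check for the variables used in this guide.
// Returns a list of human-readable problems; an empty list means "looks fine".
function checkQasEnv(env) {
  const problems = [];
  for (const name of ['QAS_TOKEN', 'QAS_URL']) {
    const value = env[name];
    if (value === undefined || value === '') {
      problems.push(`${name} is not set`);
    } else if (value !== value.trim() || /[\r\n]/.test(value)) {
      // Stray whitespace is a common side effect of pasting into a secret field.
      problems.push(`${name} contains surrounding whitespace or a line break`);
    }
  }
  const url = (env.QAS_URL || '').trim();
  if (url) {
    try {
      // Assumption: the QA Sphere URL is served over https, as in the examples.
      if (new URL(url).protocol !== 'https:') {
        problems.push('QAS_URL should start with https://');
      }
    } catch {
      problems.push('QAS_URL is not a valid URL');
    }
  }
  return problems;
}

// Demonstration with sample values (never print real secret values).
console.log(checkQasEnv({
  QAS_TOKEN: 't123.ak456.abc789xyz\n',
  QAS_URL: 'https://company.eu1.qasphere.com',
}));
```

In a workflow you would pass `process.env` instead of the sample object and exit with a non-zero code when the list is not empty, so the job fails with a readable message rather than an authentication error.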

Issue: Workflow Doesn't Trigger

Symptoms:

- Push code but workflow doesn't run

- Workflow file exists but not visible in Actions tab

Solutions:

- Verify workflow file location:

.github/workflows/qa-sphere-tests.yml ✅ Correct

.github/workflow/qa-sphere-tests.yml ❌ Wrong (missing 's')

github/workflows/qa-sphere-tests.yml ❌ Wrong (missing '.')

- Check YAML syntax:

# Validate YAML locally

npx js-yaml .github/workflows/qa-sphere-tests.yml

- Verify trigger configuration:

on:
  push:
    branches: [main, develop] # Check branch names match
  pull_request:
    branches: [main, develop]

- Check branch protection rules:

- Repository Settings → Branches

- Ensure Actions aren't blocked by branch protection

Best Practices

1. Always Use Markers

Include QA Sphere markers in all automated tests:

// ✅ Good

test('BD-001: User can login successfully', async ({ page }) => {});

// ❌ Bad - no marker

test('User can login successfully', async ({ page }) => {});
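A quick audit of test titles can catch missing markers before a workflow run does. The sketch below is a hypothetical helper, not part of QA Sphere tooling: the name `auditTitles` and the marker pattern are assumptions based on the examples in this guide. It also lists markers used by more than one title, purely so you can confirm that the reuse is intentional.

```javascript
// Assumed marker shape: uppercase project code, hyphen, digits (e.g. BD-001).
const MARKER = /\b[A-Z][A-Z0-9]*-\d+\b/;

// Split titles into those without a marker and markers used more than once.
function auditTitles(titles) {
  const missing = [];
  const seen = new Map(); // marker -> titles that use it
  for (const title of titles) {
    const match = MARKER.exec(title);
    if (!match) {
      missing.push(title);
      continue;
    }
    const list = seen.get(match[0]) || [];
    list.push(title);
    seen.set(match[0], list);
  }
  const duplicates = [...seen]
    .filter(([, list]) => list.length > 1)
    .map(([marker]) => marker);
  return { missing, duplicates };
}

const report = auditTitles([
  'BD-001: User can login successfully',
  'User can login successfully',
  'BD-001: User can login with SSO',
]);
console.log(report);
```

How you collect the titles is up to you; the list could come from your test runner's listing output or from the names in a JUnit XML file.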

2. Upload on Every Workflow Run

Configure upload to run even when tests fail:

upload-to-qasphere:
  needs: test
  if: always() # Run even if test job fails

This ensures you track both passing and failing test results.

3. Use Descriptive Run Names

Use the --run-name option to create meaningful test run titles:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
    QAS_URL: ${{ secrets.QAS_URL }}
  run: |
    qasphere junit-upload \
      --run-name "Run #{env:GITHUB_RUN_NUMBER} - {env:GITHUB_REF_NAME}" \
      ./junit-results/results.xml

For branch-specific runs, you can also upload to existing runs:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
    QAS_URL: ${{ secrets.QAS_URL }} # Needed for ${QAS_URL} in the script below
  run: |
    if [ "${{ github.ref_name }}" = "main" ]; then
      # Upload to production run
      qasphere junit-upload -r ${QAS_URL}/project/BD/run/100 ./junit-results/results.xml
    elif [ "${{ github.ref_name }}" = "develop" ]; then
      # Upload to development run
      qasphere junit-upload -r ${QAS_URL}/project/BD/run/101 ./junit-results/results.xml
    else
      # Create new run for feature branches
      SHORT_SHA=$(echo "${{ github.sha }}" | cut -c1-7)
      qasphere junit-upload --run-name "{env:GITHUB_REF_NAME} - ${SHORT_SHA}" ./junit-results/results.xml
    fi

4. Secure Your API Keys

- ✅ Store in GitHub Secrets

- ✅ Use repository or organization secrets

- ✅ Rotate keys periodically

- ❌ Never commit to repository

- ❌ Never log or print in workflow

5. Upload Attachments for Failures

Help debug failures by including screenshots:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
    QAS_URL: ${{ secrets.QAS_URL }}
  run: qasphere junit-upload --attachments ./junit-results/results.xml

6. Match Playwright Versions

Always keep Docker image version in sync with npm package:

// package.json
{
  "devDependencies": {
    "@playwright/test": "1.51.1"
  }
}

# .github/workflows/qa-sphere-tests.yml
jobs:
  test:
    container:
      image: mcr.microsoft.com/playwright:v1.51.1-jammy

7. Use Caching for Faster Builds

Cache dependencies to speed up workflow runs:

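- name: Cache npm downloads
  uses: actions/cache@v4
  with:
    # npm ci deletes node_modules before installing, so cache the npm
    # download cache (~/.npm) rather than node_modules itself
    path: ~/.npm
    key: ${{ runner.os }}-node-${{ hashFiles('**/package-lock.json') }}
    restore-keys: |
      ${{ runner.os }}-node-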

8. Use Concurrency Controls

Prevent redundant workflow runs for the same branch:

concurrency:
  group: ${{ github.workflow }}-${{ github.ref }}
  cancel-in-progress: true

9. Test Locally First

Before pushing to GitHub, test the integration locally:

# Set environment variables

export QAS_TOKEN=your.api.key

export QAS_URL=https://company.eu1.qasphere.com

# Run tests

npm test

# Upload results

npx qas-cli junit-upload ./junit-results/results.xml

10. Monitor Upload Success

Add error handling to track upload status:

- name: Upload to QA Sphere
  env:
    QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
    QAS_URL: ${{ secrets.QAS_URL }}
  run: |
    if qasphere junit-upload ./junit-results/results.xml; then
      echo "✅ Successfully uploaded results to QA Sphere"
    else
      echo "❌ Failed to upload results to QA Sphere"
      exit 1
    fi

11. Set Appropriate Artifact Retention

Balance storage costs with retention needs:

- name: Upload test results
  uses: actions/upload-artifact@v4
  with:
    name: junit-results
    path: junit-results/
    retention-days: 7 # Adjust based on your needs (1-90 days)

Complete Working Example

Here's a complete, production-ready configuration:

# .github/workflows/qa-sphere-tests.yml
name: QA Sphere Integration

on:
  push:
    branches: [main, develop]
  pull_request:
    branches: [main, develop]
  workflow_dispatch:

# Prevent redundant runs for the same branch
concurrency:
  group: ${{ github.workflow }}-${{ github.ref }}
  cancel-in-progress: true

env:
  PLAYWRIGHT_VERSION: "1.51.1"

jobs:
  test:
    name: Run Playwright Tests
    runs-on: ubuntu-latest
    container:
      image: mcr.microsoft.com/playwright:v1.51.1-jammy

    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Cache npm downloads
        uses: actions/cache@v4
        with:
          # npm ci deletes node_modules before installing, so cache the npm
          # download cache rather than node_modules itself
          path: ~/.npm
          key: ${{ runner.os }}-node-${{ hashFiles('**/package-lock.json') }}

      - name: Install dependencies
        run: npm ci

      - name: Run Playwright tests
        run: npx playwright test
        continue-on-error: true

      - name: Upload test results
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: junit-results
          path: junit-results/
          retention-days: 7

      - name: Upload test artifacts
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: test-artifacts
          path: |
            test-results/
            playwright-report/
          retention-days: 7

  upload-to-qasphere:
    name: Upload Results to QA Sphere
    runs-on: ubuntu-latest
    needs: test
    if: always()

    steps:
      - name: Download test results
        uses: actions/download-artifact@v4
        with:
          name: junit-results
          path: junit-results/

      # No npm cache here: this job does not check out the repository,
      # so there is no lock file for setup-node to key a cache on
      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'

      - name: Install QAS CLI
        run: npm install -g qas-cli

      - name: Verify results file exists
        run: test -f junit-results/results.xml || (echo "Results file not found" && exit 1)

      - name: Upload to QA Sphere
        env:
          QAS_TOKEN: ${{ secrets.QAS_TOKEN }}
          QAS_URL: ${{ secrets.QAS_URL }}
        run: |
          SHORT_SHA=$(echo "${{ github.sha }}" | cut -c1-7)
          qasphere junit-upload \
            --run-name "Run #{env:GITHUB_RUN_NUMBER} - {env:GITHUB_REF_NAME} (${SHORT_SHA})" \
            --attachments \
            ./junit-results/results.xml

      # Runs only when the upload step succeeded, so the summary stays truthful
      - name: Workflow Summary
        run: |
          echo "## QA Sphere Upload Summary" >> $GITHUB_STEP_SUMMARY
          echo "✅ Test results uploaded to QA Sphere" >> $GITHUB_STEP_SUMMARY
          echo "**View results:** ${{ secrets.QAS_URL }}/project/BD/runs" >> $GITHUB_STEP_SUMMARY
          echo "**Workflow:** Run #${{ github.run_number }}" >> $GITHUB_STEP_SUMMARY
          echo "**Branch:** ${{ github.ref_name }}" >> $GITHUB_STEP_SUMMARY
          echo "**Commit:** ${{ github.sha }}" >> $GITHUB_STEP_SUMMARY

Additional Resources

- QA Sphere CLI Documentation - Playwright Integration

- QA Sphere CLI Documentation - WebdriverIO Integration

- QA Sphere API Documentation

- Authentication Guide

- GitHub Actions Documentation

- Playwright Documentation

Getting Help

If you encounter issues:

- Check the Troubleshooting section above

- Review workflow logs in GitHub Actions

- Test CLI locally with same configuration

- Check GitHub Actions workflow syntax

- Contact QA Sphere support: [email protected]

Summary: You now have everything you need to integrate QA Sphere with GitHub Actions. The QAS CLI tool automatically handles test result uploads, making test management seamless and automated. Every workflow run will now update QA Sphere with the latest test results.